Repurposing Pre-trained Video Diffusion Models for Event-based Video Interpolation

Conference on Computer Vision and Pattern Recognition (CVPR) — 2025

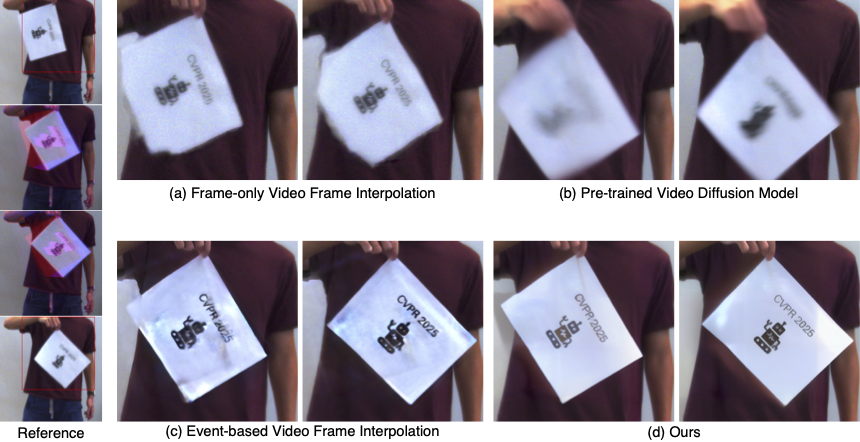

Video Frame Interpolation aims to recover realistic missing frames between observed frames, generating a high-frame-rate video from a low-frame-rate video. However, without additional guidance, large motion between frames makes this problem ill-posed. Event-based Video Frame Interpolation (EVFI) addresses this challenge by using sparse, high-temporal-resolution event measurements as motion guidance. This guidance allows EVFI methods to significantly outperform frame-only methods. However, to date, EVFI methods have relied upon a limited set of paired event-frame training data, which severely restricts their performance and generalization. In this work, we overcome the limited-data challenge by adapting video diffusion models pre-trained on internet-scale datasets to EVFI. We experimentally validate our approach on real-world EVFI datasets, including a new one we introduce. Our method outperforms existing methods and generalizes across cameras far better.

@article{Chen2025RE-VDM,
  author = {Chen, Jingxi and Feng, Brandon Y. and Cai, Haoming and Wang, Tianfu and Burner, Levi and Yuan, Dehao and Fermuller, Cornelia and Metzler, Christopher A. and Aloimonos, Yiannis},
  title = {Repurposing Pre-trained Video Diffusion Models for Event-based Video Interpolation},
  journal = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2025},
}